Whether LLMs can "understand" and introducing a smarter way to chunk PDFs

Plus LangChain zoom camp recording, industry news, and a reader question gets answered

What’s up, everyone!

Thank you to everyone who joined the LangChain ZoomCamp on Friday.

Don't worry if you missed it; I’ve got the recording for you. I’ll stick to sending links directly to the Zoom recordings, so here’s the link where you can watch or download the videos.

I went off script for this session.

I asked the participants if we should try to hack on something LIVE and unscripted, and most folks were down for it! The notebook is on GitHub, and we discussed more than the notebook reflects.

I enjoyed this session, and thanks for letting me go off-script!

📚 For those interested, I've introduced a new course on deep learning for image classification via the LinkedIn Learning platform.

Over 800 people have taken the course in the two weeks since its launch, which is insane to me!

If you don’t have a LinkedIn Learning subscription, you can purchase the course outright for $45.

✨ Blog of the Week

This week’s pick is an article by Devansh from Artificial Intelligence Made Simple.

In it, he boldly addresses the controversial question of whether LLMs truly understand language. The piece revolves around defining "understanding" and evaluating LLMs against that definition. He explores several frameworks for assessing understanding, including Bloom’s Taxonomy. He concludes that while LLMs exhibit some characteristics of understanding, they fall short in areas like generalization, abstraction, and self-improvement.

Here are some of the key points I picked out from his article:

Understanding vs. Performance: Just because LLMs flex their muscles in language tasks doesn't mean they've cracked the code of "understanding" language like us humans. It's like that friend who's great at trivia nights but can't explain why the sky is blue. Maybe these LLMs are good at spotting patterns from the petabytes of data they've seen and not really "getting" it. The real question is: What's "understanding" anyway? Sure, LLMs can whip up some snazzy text, but can they think out of the box like us?

Abstraction in LLMs: Abstraction is like the art of capturing the soul of an idea. Take the number "5" - it's not just a number; it's a symbol we use to count stuff. Devansh throws some shade at LLMs, suggesting they might not be the Picasso of abstract thinking. Like, he tried making LLMs recreate a debate between Socrates and Diogenes using the Socratic method, and boy, did it miss the mark!

Self-improvement in AI: Dreaming of AI models that can level up on their own? Hold your horses! Devansh kinda bursts that bubble. It's like expecting your pet rock to learn new tricks. Research says LLMs aren't great at self-reflection. Train them on their own stuff, and they might just get a bit too self-obsessed, amplifying their quirks and maybe even getting amnesia about the original content.

Devansh's writing style is informal, engaging, and peppered with humour. He effectively balances deep technical insights with light-hearted commentary, making the content informative and entertaining. Read the full piece here.

Get a shirt, and support the newsletter.

I keep all my content freely available by partnering with brands for sponsorships.

Lately, the pipeline for sponsorships has been a bit dry, so I launched a t-shirt line to gain community support.

You can check out my designs here and explore the excellent product descriptions I generated using GPT-4!

🛠️ GitHub Gem

A huge pain point for Retrieval Augmented Generation is the challenge of making the text in large documents, especially PDFs, available for LLMs due to the limitations of the LLM context window.

You could naively chunk your documents - a straightforward method of breaking down large documents into smaller text chunks without considering the document's inherent structure or layout.

Going this route, you end up dividing the text based on a predetermined size or word count, such as fitting within the LLM context window (typically 2000-3000 words). The problem is that you can disrupt the semantics and context implied by the document's structure.
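To make the problem concrete, here is a minimal sketch of naive chunking in Python. The function name `naive_chunk` and its parameters are my own, made up for illustration; the tiny `max_words` value just keeps the example readable.

```python
def naive_chunk(text: str, max_words: int = 200, overlap: int = 0) -> list[str]:
    """Split text into fixed-size word windows, ignoring document structure."""
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in [0, max_words)")

    words = text.split()
    step = max_words - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks


# A toy "document" with two section headings and some filler body text.
sample = "1. Introduction " + "alpha " * 8 + "2. Methods " + "beta " * 8
chunks = naive_chunk(sample, max_words=6)

for i, chunk in enumerate(chunks):
    print(i, repr(chunk))
```

Notice where the "2. Methods" heading lands: at the tail end of a chunk full of Introduction text, cut off from the body it is supposed to introduce. That is exactly the kind of lost context described above.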

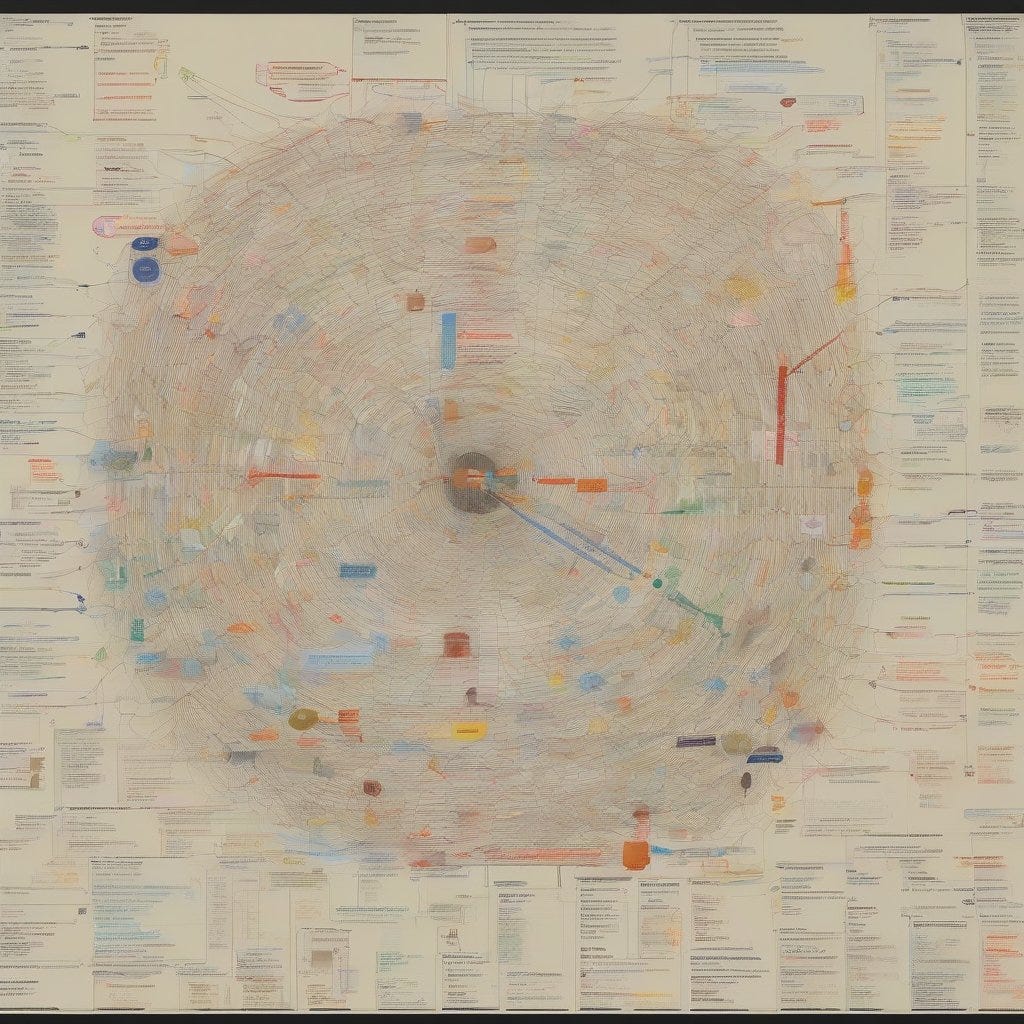

The author behind this week’s gem proposes a solution called "smart chunking" that is layout-aware and considers the document's structure. This method:

Is aware of the document's layout structure, preserving the semantics and context.

Identifies and retains sections, subsections, and their nesting structures.

Merges lines into coherent paragraphs and maintains connections between sections and paragraphs.

Preserves table layouts, headers, subheaders, and list structures.

To this end, he’s created the LlamaSherpa library, which has a "LayoutPDFReader," a tool designed to split text in PDFs into these layout-aware chunks, providing a more context-rich input for LLMs and enhancing their performance on large documents.
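To show the idea rather than the library, here is a toy sketch of layout-aware chunking. To be clear, this is not LlamaSherpa's implementation or API: the `Block` class and `layout_aware_chunks` function are hypothetical, and I'm assuming the PDF has already been parsed into headers and paragraphs. The point is only that each chunk carries its section path with it.

```python
from dataclasses import dataclass


@dataclass
class Block:
    kind: str   # "header" or "para" (hypothetical labels for this sketch)
    level: int  # heading depth for headers; ignored for paragraphs
    text: str


def layout_aware_chunks(blocks: list[Block]) -> list[str]:
    """Emit one chunk per paragraph, prefixed with its section breadcrumb."""
    path: list[tuple[int, str]] = []  # stack of (level, title) for open sections
    chunks = []
    for block in blocks:
        if block.kind == "header":
            # Close sections at the same or deeper level before opening this one.
            while path and path[-1][0] >= block.level:
                path.pop()
            path.append((block.level, block.text))
        else:
            breadcrumb = " > ".join(title for _, title in path)
            chunks.append(f"{breadcrumb}\n{block.text}" if breadcrumb else block.text)
    return chunks


doc = [
    Block("header", 1, "Methods"),
    Block("header", 2, "Data"),
    Block("para", 0, "We collected 500 PDFs."),
    Block("header", 2, "Model"),
    Block("para", 0, "We fine-tuned a small LLM."),
    Block("header", 1, "Results"),
    Block("para", 0, "Accuracy improved."),
]

chunks = layout_aware_chunks(doc)
for chunk in chunks:
    print(chunk, end="\n---\n")
```

Each paragraph now arrives with its nesting intact ("Methods > Data", "Methods > Model"), so a retriever or LLM sees which section the text came from even when the chunk is read in isolation.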

I’ve created a getting started notebook introducing the library, its components, and how to use it. Check it out here and get hands-on.

📰 Industry Pulse

🤖 How is generative AI revolutionizing the field of robotics? The article from VentureBeat discusses the significant advancements in robotics, primarily driven by the rapid progression in generative artificial intelligence. Leading tech companies and research labs are leveraging generative AI models to overcome significant challenges in robotics, which have so far hindered their widespread deployment outside of heavy industry and research labs.

🤔 As generative AI continues to make robots more intelligent and capable, how will this impact our everyday lives? Will we soon see robots seamlessly integrated into our daily routines?

🤖 Is the AI boom in Silicon Valley reshaping the global investment landscape? Silicon Valley is witnessing a significant surge in investments toward artificial intelligence (AI) startups, with funds reaching $17.9 billion in the third quarter. Despite a global drop in overall startup deals, AI firms have seen a 27% increase in funding compared to last year. This growth is primarily attributed to the increasing reliance on automation and advanced technology across various industries, particularly during the pandemic.

🔍 With the AI boom in full swing, how will this impact the future of business and technology? Will the challenges AI startups face hinder this growth, or will it lead to more innovative solutions?

🤖 Is the narrative that "generative AI is a job killer" really accurate? A recent report by employment site Indeed suggests that the fear of AI taking over jobs might be overblown. The study, which analyzed over 15 million job postings, found that AI is more likely to change jobs rather than eliminate them.

🤔 So, is AI a job killer or a job changer? And how can we prepare ourselves for this shift?

🤖 Is AI adoption as widespread as it seems? A recent study by a group of economists reveals that despite the hype, AI adoption is not as widespread as it might seem. The study, which analyzed government data from a 2018 survey of 474,000 firms, found that less than 6% of businesses were using AI technologies. However, the study also noted a significant rise in AI adoption since then, with a recent McKinsey study revealing that 79% of respondents had some exposure to AI.

🧐 So, is your business part of the AI revolution or still on the sidelines?

💡 Your Questions, Answered

I opened a chat last week and asked what you’d like to learn about, and Jeff suggested an excellent topic: the difference between base, instruction-tuned, and chat-tuned LLMs.

Base, Instruction-tuned, and Chat-tuned LLMs represent different stages or configurations in the tuning of large language models to perform specific tasks or engage in particular types of interactions:

Base LLMs

Base LLMs are essentially the starting point in the process.

They are designed to predict the next word in a sequence based on the training data they have been exposed to. However, they are not tailored to answer questions, carry out conversations, or solve problems. For instance, if given a prompt like “In this book about LLMs, we will discuss,” a Base LLM might complete the sentence in a way related to the topic but won't answer a specific question or engage in a dialogue.

Instruction-tuned LLMs

Instruction-tuned LLMs are developed from Base LLMs through further training and fine-tuning to follow specific instructions provided in the input rather than merely predicting the next word in a sequence.

They are specialized in answering questions or executing commands. For instance, they can respond appropriately to prompts like “Write me a story” or “What is the capital of France?”.

To create an Instruction-tuned LLM, a Base LLM is further trained using a large dataset covering sample instructions and the expected responses. This further training helps the model to understand and execute instructions provided in the input.

Chat-tuned LLMs

Chat-tuned LLMs are specialized in engaging in dialogues, typically between human and AI entities.

They are fine-tuned to participate in back-and-forth interactions, which is a progression from the instruction-tuned models that are more focused on responding to individual prompts or commands.

This structured tuning process, moving from Base LLMs to Instruction-tuned and then to Chat-tuned LLMs, allows for the creation of models that are increasingly adept at handling interactive and nuanced human language inputs.
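One way to see the difference is to look at what each kind of model typically expects as input. The sketch below is illustrative only: the template strings and function names are made up, and real models each define their own prompt or chat format, so check the model card before copying any of this.

```python
def base_prompt(text: str) -> str:
    """A base model just continues raw text, so the prompt is the text itself."""
    return text


def instruction_prompt(instruction: str) -> str:
    """Wrap a single instruction in a (hypothetical) instruction template."""
    return f"### Instruction:\n{instruction}\n\n### Response:\n"


def chat_prompt(messages: list[dict[str, str]]) -> str:
    """Render a multi-turn conversation in a (hypothetical) chat template."""
    lines = [f"<{m['role']}>: {m['content']}" for m in messages]
    lines.append("<assistant>:")  # cue the model to produce the next turn
    return "\n".join(lines)


print(base_prompt("In this book about LLMs, we will discuss"))
print(instruction_prompt("What is the capital of France?"))

conversation = [
    {"role": "user", "content": "What is the capital of France?"},
    {"role": "assistant", "content": "Paris."},
    {"role": "user", "content": "And how many people live there?"},
]
chat = chat_prompt(conversation)
print(chat)
```

The chat version is the only one that carries history: the follow-up question "And how many people live there?" only makes sense because the earlier turns are included in the prompt.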

🔍 Research Refined

This week’s paper may look familiar if you looked at the LlamaSherpa demo notebook earlier. It’s "Which Prompts Make The Difference? Data Prioritization For Efficient Human LLM Evaluation."

Problem: The paper highlights the challenge of evaluating large language models (LLMs) using human evaluations. Given the resource-intensive nature of human evaluations, there's a need to identify which data instances (prompts) are most effective in distinguishing between models.

Research Question: The central research question is whether it's feasible to minimize human-in-the-loop feedback by prioritizing data instances that most effectively distinguish between models.

Key Findings:

Human evaluations capture linguistic nuances and user preferences more accurately than traditional automated metrics.

The authors evaluated various metric-based methods to enhance the efficiency of human evaluations.

These metrics reduce the required annotations, saving time and costs while ensuring a robust performance evaluation.

Their method was effective across different model families. Focusing on the top-20 percentile of prioritized instances, they observed a reduction in indecisive ("tie") outcomes of up to 54% compared to random samples.
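Here is a rough sketch of what "prioritize, then keep the top 20%" could look like. Big caveat: the scoring function below is a stand-in I made up (simple token-overlap disagreement between two models' answers), not the metrics the authors use, and the record fields are hypothetical. It only illustrates the ranking-and-cutoff idea.

```python
import math


def disagreement(answer_a: str, answer_b: str) -> float:
    """Stand-in score: 1 minus Jaccard overlap of the two answers' tokens."""
    tokens_a = set(answer_a.lower().split())
    tokens_b = set(answer_b.lower().split())
    union = tokens_a | tokens_b
    if not union:
        return 0.0
    return 1.0 - len(tokens_a & tokens_b) / len(union)


def prioritize(records: list[dict[str, str]], top_fraction: float = 0.2) -> list[dict[str, str]]:
    """Rank prompts by how much the two models disagree and keep the top slice."""
    ranked = sorted(
        records,
        key=lambda r: disagreement(r["model_a"], r["model_b"]),
        reverse=True,
    )
    keep = max(1, math.ceil(len(ranked) * top_fraction))
    return ranked[:keep]


records = [
    {"prompt": "Capital of France?", "model_a": "Paris", "model_b": "Paris"},
    {"prompt": "Restate the capital.", "model_a": "The capital is Paris", "model_b": "Paris is the capital"},
    {"prompt": "How to dedupe a list?", "model_a": "Use a hash map", "model_b": "Sort the list first"},
    {"prompt": "Is water wet?", "model_a": "Yes it is", "model_b": "Yes it is not"},
    {"prompt": "What is 2 + 2?", "model_a": "4", "model_b": "4"},
]

to_annotate = prioritize(records, top_fraction=0.2)
for record in to_annotate:
    print(record["prompt"])
```

Prompts where both models say essentially the same thing are the ones most likely to end in a tie, so sending annotators the high-disagreement prompts first is the intuition behind spending less human effort per decisive comparison.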

Implication: The potential reduction in required human effort positions their approach as a valuable strategy for future evaluations of large language models.

That’s it for this one.

See you next week. If there’s anything you want me to cover, or if you have any feedback, shoot me an email.

Cheers,